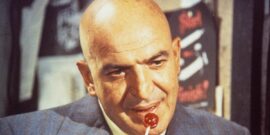

Kojak represents what the American public wants in its cops: unimpeachable integrity, unswerving dedication, and a willingness to sacrifice.

Scientifically Undermining the Rule of Law

Before he turned murderously religious, one of the Belgian bombers had been a bank robber. He fired a Kalashnikov at the police when they interrupted him in an attempted robbery, for which crime, or combination of crimes, he received a sentence of nine years’ imprisonment. Of those nine years he served only four, being conditionally discharged. The principal condition was that he had to attend a probation office once a month: about as much use, one might have supposed, as an igloo in the tropics.

No doubt he underwent various assessments before release establishing his low risk of re-offending; he probably also said before his release that he now realised that shooting policemen with Kalashnikovs was wrong, that he was sorry for it, etc. One of the causes, then, of terrorism in Europe is penological frivolity. A forty-year sentence would have been more appropriate.

In any case, penology is increasingly opposed to the rule of law: it favours the arbitrary and the speculative over the predictable and the certain. A good instance of this tendency was to be found in a paper recently published in the Lancet, one of the most important and prestigious medical journals in the world. Its title was Prediction of violent reoffending on release from prison: derivation and external validation of a scalable tool, and its authors were British and Swedish.

There is nothing intrinsically wrong with trying to predict the likelihood of a person reoffending after release from prison, of course; it is the use to which such prediction is put that may be wrong. The authors tell us in their introduction, for example, that such predictions may be used to help courts in their sentencing of those convicted: and though they do not actually say so, this must mean that the higher the assessed risk of reoffending, the longer or more severe the sentence. It is difficult to see how else such prediction could help the court in its sentencing.

The authors also tell us that the prediction of reoffending may help authorities to decide on dates of release of prisoners. This can mean only that prisoners deemed at low risk of reoffending are to be released sooner than those deemed at high risk, even if their crimes that were proved beyond reasonable doubt were similar or the same. In other words, prisoners are to be punished (or relatively rewarded) not for what they did do, but for what they might do in the future.

This would not be so arbitrary and contrary to the rule of law if the predictions were 100 per cent accurate, or very nearly so: but of course they are not. We find the following self-congratulatory sentence in the summary of the paper: ‘We have developed a prediction model in a Swedish prison population that can assist with decision making on release by identifying those who are at low risk of future violent offending…’; yet their predictions produced numerous false positives and false negatives (more than a third of the prisoners placed at the high end of the risk spectrum did not reoffend, and more than a tenth of those placed at the lower end did reoffend).

The term reoffend in this context is itself an indication that the researchers either did not understand the phenomena that they were researching, or deliberately donned rose-tinted spectacles: for they took reconviction as being coterminous with reoffending, which of course it is not. The Swedish police may be more efficient than most in the elucidation of crime, but they can hardly elucidate every crime. The authors tell us that within two to three years of release, 11263 of 47326 released prisoners (24 per cent) had reoffended violently and 21739 (46 per cent) had reoffended in other ways, making a total of 70 per cent.

On the generous supposition that nine out of ten violent offences committed by the released prisoners led to conviction, more than 26 per cent of released prisoners would have reoffended in violent fashion. On the generous supposition that seven out of ten of the released prisoners’ other offences led to conviction, 92 per cent of released prisoners would have reoffended.
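For readers who wish to check the arithmetic, here is a minimal sketch of the adjustment. It assumes, as the reasoning above implicitly does, that the violent and non-violent groups do not overlap and that the true rate is simply the reconviction rate divided by the supposed share of offences leading to conviction. The function and variable names are mine, not the paper's.

```python
# Figures quoted from the paper
RELEASED = 47326
VIOLENT_RECONVICTED = 11263
OTHER_RECONVICTED = 21739


def adjusted_rate(reconvicted: int, total: int, conviction_share: float) -> float:
    """Scale a reconviction rate up by an assumed share of offences ending in conviction.

    This is a deliberately crude assumption: rate / conviction_share.
    """
    return (reconvicted / total) / conviction_share


# Raw reconviction rate for violent offences
violent_rate = VIOLENT_RECONVICTED / RELEASED

# Suppositions from the text: 9 in 10 violent offences and
# 7 in 10 other offences lead to conviction.
violent_adjusted = adjusted_rate(VIOLENT_RECONVICTED, RELEASED, 0.9)
other_adjusted = adjusted_rate(OTHER_RECONVICTED, RELEASED, 0.7)

# Assumes the two groups are disjoint, so the rates can simply be added.
total_adjusted = violent_adjusted + other_adjusted

if __name__ == "__main__":
    print(f"violent, as reconvicted: {violent_rate:.1%}")
    print(f"violent, adjusted:       {violent_adjusted:.1%}")
    print(f"other, adjusted:         {other_adjusted:.1%}")
    print(f"all offences, adjusted:  {total_adjusted:.1%}")
```

The sketch counts prisoners and not crimes, so it shares the binary, yes-or-no limitation of the paper's own count.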

Whether the prisoners reoffended or failed to reoffend was counted in binary fashion: yes or no. One, two or a hundred offences counted as one. Swedish criminals may be less productive of crimes than British; but even so, it is likely that the true recidivism rate was more than 100 per cent, as measured by crimes committed and not by convictions. Of this the authors of the paper showed no awareness whatever.

This is not to say that the paper was altogether without interest or value. It informs us that, of the violent offences, 1 per cent were homicide, that is to say, 112 in total. This is probably at least 50 times the rate expected for the age-adjusted population as a whole, which perhaps is not surprising; but when the authors tell us, more or less en passant, that shorter prison sentences are associated with higher rates of violent crime than longer, we may wonder – though the data do not allow us to say so definitively, because the strength of the association is not stated – whether some homicides, at least, would have been prevented by longer prison sentences. (More than half of prison sentences in Sweden are shorter than six months.)

This little fact, however, is interesting for two other reasons. First it flies in the face of the assumption that prison is a school of crime, for the supposed education seems to be in inverse proportion to its length. Second, since longer prison sentences are given to more serious or repeat offenders, the association is exactly the reverse of what would be expected – unless prison exerted a corrective effect (though there is also the effect of aging to be considered).

The authors found, perhaps not surprisingly, that there was a strong association between violent offending and alcohol or drug abuse. This led them to suggest that released prisoners with a history of either should be offered assistance to overcome it, a reasonable enough suggestion if such assistance is effective. On that question I am agnostic but sceptical.

The authors were proud that the easily-administered scale that they developed using relatively few variables was about as predictive as the scales doctors use to predict heart attack and stroke. No doubt this confirmed them in their underlying belief that crime is disease, on the basis of the following syllogism:

Heart attacks are a disease.

Heart attacks are moderately predictable.

Reoffending is moderately predictable.

Therefore, reoffending is a disease.

That is why, after all, they published in the Lancet: but no belief undermines the rule of law more thoroughly.